In partnership with Immerse UK, we’re exploring the impact and importance of virtual production and realtime content production through a series of thought pieces and panel discussions with industry experts. Second in the series, Anton Christodoulou looks at how this new technique will transform the future of live experiences.

You can watch the full panel discussion from February 2022 here.

Introduction

Virtual production techniques are most often associated with the creation of linear content for film and TV, and increasingly for brand marketing. However, the potential of this technology extends far beyond this. Anton Christodoulou, Group CTO at Imagination, is joined by a panel of industry experts to explore what happens when the virtual world meets physical spaces, and how this can transform the world of live experiences to create next-level entertainment!

How can realtime transform live experiences?

The most familiar use of virtual production for live experiences is when the audience is watching remotely through a screen, with a blend of physical and digital elements used in realtime to enhance the overall experience and maximise the creative opportunity.

The next level is the ability to watch a live performance in real life (IRL) that blends physical elements with virtual content in realtime, opening up even more boundary-pushing opportunities. Using realtime technologies in front of a live physical audience brings with it huge potential, and a number of challenges.

Virtual Live Experiences

Arguably simpler and easier to execute, virtual live experiences are closely associated with live product launches and reveals for automotive brands such as Ford, BMW and GM. Take a look at this Ford event from last year (watch the full video here):

Photograph: Ford Motor Company

The main benefit of this approach is the ability to create a much richer and more engaging experience compared with a standard virtual broadcast or Zoom call. With everything being done in pre-production, content with high production values and special effects can be broadcast live, or “as live”, by preparing key elements of the experience and recording content a few hours or days before it is broadcast. Brands and agencies are only just getting to grips with what is possible and the benefits this brings.

In Real Life Experiences

In terms of the creative possibilities and audience impact, virtual production really comes to life when it is delivered in person. IRL experiences can work by using an LED screen to broadcast the virtual assets, which interact with physical elements live on stage – be that performers or even the audience – combined with other technologies such as gesture and voice recognition, motion and volumetric capture, realtime soundscapes and even smell. The broadcast experience is also destined to be richer and more engaging, with many more opportunities for audience interaction, be it physically or virtually. This is the cutting edge of what is possible for live experiences. Head to The Outernet on London’s Tottenham Court Rd for a glimpse of this exciting future!

The Opportunities and Challenges

Getting to grips with how in-the-moment virtual production works in this context comes with many unique challenges, while opening up a new world of opportunities to do things very differently.

Supercharged Engagement

The creative flexibility is extremely exciting when imagining it applied to the bastions of live experiences – festivals, theatres and arenas. What could happen when using virtual production to create hyper-engaging experiences that interact with the live physical, and digital, audience? “Creating something much richer than just filming or broadcasting a performance – not just simply watching people on stage. It’s not about trying to re-create a live experience, it’s about ripping up the rulebook and doing something new,” says Tishura Khan, Creative Technology Manager at the Outernet.

It’s possible to create a live environment that responds to audience interaction in realtime, extending the set to blur the lines between virtual and real for a fully immersive experience. Borrowing from classic science fiction and applying it to the real world, imagine a space that changed colour based on the audience’s mood, or one that added other inputs and senses, such as gesture recognition and aroma.

When live performances in Stratford-upon-Avon were suspended due to the pandemic, the Royal Shakespeare Company, Audience of The Future and University of Portsmouth adopted virtual production techniques and motion capture to create Dream, inspired by A Midsummer Night’s Dream. Watched by 70,000 people in 151 countries, Dream explored how audiences could experience live performances in the future. Set in a virtual forest, actors were able to interact with viewers watching at home, expanded by music created in realtime based on their movements. Pippa Bostock, Business Director at the University of Portsmouth, was really excited about how viewers at home engaged with the performance: “We saw some really interesting photos trending on Instagram and Twitter, of how people had set the scene for their own Dream experience, with plants around them, and a glass of wine! People were creating their own immersion, something different, something special”.

Photograph: Stuart Martin/Royal Shakespeare Company

The restrictions of not being able to perform in front of an in-person live audience opened up the possibilities of blending the physical and digital in realtime to create new and immersive experiences, wherever you are. Pippa agrees: “We always use a challenge-led approach, exploring what you can do using new technologies, to enable people to have experiences that were not previously possible. The best technology should disappear and you are left with the magic. Interactivity is key, and giving the audience the opportunity to experiment and learn”.

Photograph: Outernet London

The Complications of Going Live

Broadcasting live in any context requires that everything runs like clockwork. When you are live, and the “curtains come up”, that is what the audience sees. Full stop. Ensuring the technology can deliver a live performance without a hitch introduces a very different set of challenges. Building a robust tech stack and realtime pipeline is essential. Using a realtime engine to create a digital twin of the environment, in order to design and test before building or updating the final physical experience, is also a very powerful tool. As Tishura states, “For live, the technology has to work 100% of the time, otherwise you break the immersion, you have to keep the fourth wall. Therefore you must have a solutions architect and plan for any scenario when things do not work as expected”.

With such a new technology, there’s a huge learning curve when it comes to the basic requirement of making sure it actually works. Planning is key, as is having an expert and experienced team to help you pull off the experience. Finding the right talent, with creative skills, experience in delivering live experiences and a deep technical background, is challenging.

Where is the Talent?

Realtime technology experts are scarce because outside of video game development, where the technology is established, using the tech in this way is still very new. To address this challenge, the University of Portsmouth is building a new department, The Centre for Creative and Immersive Extended Reality (CCIXR), one of the UK’s first integrated facilities to support innovation in the creative and digital technologies of virtual, augmented and extended realities. Pippa is passionate about ensuring the next generation of talent is ready. “We often say we’re building a unicorn farm because there is a skills gap that we need to fill to enable the industry to grow”. These students are unicorns because they combine creative techniques and technical expertise that have historically been treated as separate disciplines, in education and industry. This trailblazing approach is designed to create the next generation of digital pioneers, working in roles that are only just emerging, or don’t even exist yet. There is a huge opportunity for innovation coming out of the digital-native generation, for many of whom using Unreal Engine and Unity as creative tools will become second nature.

Photograph: The Centre for Creative and Immersive Extended Reality (CCIXR), University of Portsmouth

The Sustainability Question

The event and technology industry is now on a par with the aviation industry when it comes to carbon impact. The cultural shift accelerated by the pandemic has pushed our willingness to adopt virtual experiences to the top of the agenda for companies and consumers, and refocused attention on the right and wrong ways to do things, and how we can do better. Anna Abdelnoor, Co-Founder of Isla, an independent industry body driving sustainable events to a net-zero future, spoke about how the opportunities and challenges can be opaque: “Virtual production lends itself to hybrid solutions, to fully in-person events which reduce the impact of travel and is, therefore, more sustainable, which unfortunately also makes measuring sustainability very complicated”.

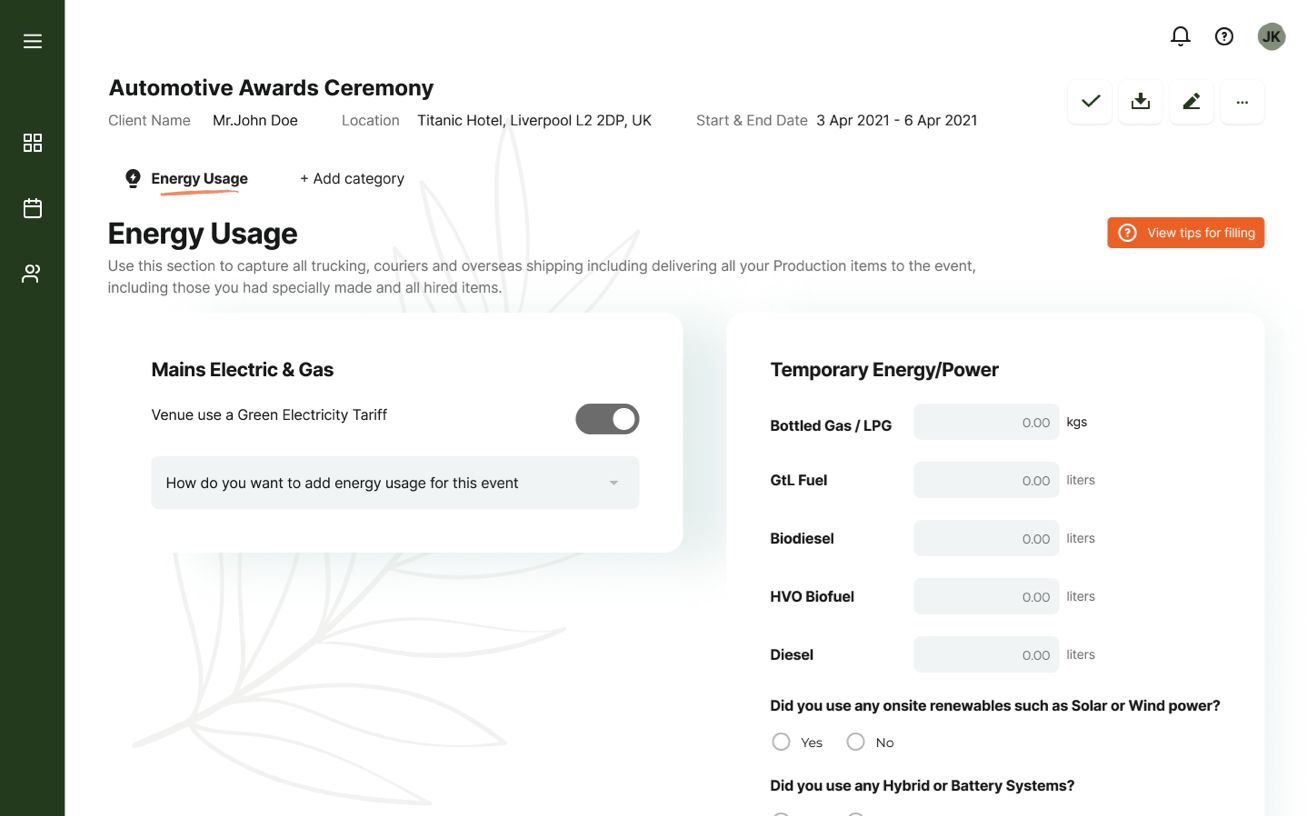

Virtual production as a sustainable solution, as Anna says, is “up for debate”. Anna believes that the first step is being able to measure the impact – venue energy emissions, temporary power sources, travel and accommodation for attendees and crew, the choice and life cycle of materials, food waste footprint, production and transport costs. To get ahead of the curve, Isla has launched TRACE: a carbon measurement platform for sustainable events.

Photograph: Isla

How we use virtual production to drive down carbon emissions must revolve around understanding these challenges, and will depend on many integrated solutions. Imagination developed and launched XPKit, a global SaaS-based customer experience toolkit designed to track, manage and connect live experiences using a common digital infrastructure, drawing on its decades-long experience designing and building live experiences and destinations for major brands. Integrating platforms like these in the future is going to be key to enabling companies to measure and optimise their carbon footprint across physical and digital experiences.

Into the Metaverse…

Another key element to consider, with the buzzwords of the moment such as the Metaverse, NFTs and Web3, is how realtime technology can be used to enhance and merge physical and virtual experiences in a meaningful and valuable way. Machine learning algorithms, for example, could be used to automate and adapt experiences in realtime based on audience input, or to generate personalised content as NFTs.

As with all the game-changing technologies that have come before it, we do not know exactly what the short-term vs the long-term outcomes will look like. There is no doubt, however, that as existing talent continues to embrace this, and new talent enters the workforce, it will pave the way for a new wave of spectacular digitally enhanced experiences – and we need time and industry investment to learn, play and grow these skills.

Anton Christodoulou is Group CTO for award-winning experience design company, Imagination.